Kinect 2 for Windows Demo App

The Hands On Labs to complete a sample application for Windows 8.1 and the Kinect 2 for Windows

Kinect 2 Hands On Labs

Lab 4: Displaying Depth Data

Estimated Time to Complete: 15 min

This lab is part of a series of hands-on labs that teach you how to create a Windows 8.1 Store application using almost every available feature of the Kinect 2. This is the fourth lab in the series, and it teaches you how to retrieve the depth feed from the Kinect alongside the feeds from the previous labs.

This lab will explain the following:

- How to select the new depth frame.

- How to get the new depth frame type from the Multi Source Frame.

- How to convert the depth frame data to a color bitmap.

This lab comes with a starting point code solution and a completed code solution of the exercises.

Exercise 1 - Displaying the Depth Frame

This exercise will teach you how to retrieve a depth frame in a Kinect for Windows 2 application for Windows 8.1. This lab and all subsequent labs in this series are built using C# and assume you have a fundamental knowledge of the C# language.

The screenshots here are from Visual Studio Pro 2013 Update 2; the Community Edition is identical.

This lab builds upon the previous lab, which integrated the multi-source frame reader. The process for getting a color bitmap from the depth data is very similar to the method used for the infrared frame in the first lab.

To understand the depth frame, first look at the Kinect 2 device itself. While it is on, the Kinect 2 shows a fuzzy red light at its center. This is an infrared emitter, and it shoots out a lot of dots, like thousands of invisible laser pointers. Another camera sees the dots and measures the time between the light being fired and the dot becoming visible. From this time, the Kinect 2 can determine how far a single point is from the camera.
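The relationship between travel time and distance described above can be sketched with a little arithmetic. This is an idealized illustration only, not a description of how the sensor's firmware works: it assumes the measured time is a full round trip (emitter to surface and back), and the class and method names are hypothetical.

```csharp
using System;

// Idealized time-of-flight arithmetic (hypothetical helper, not part of the Kinect SDK).
public static class TimeOfFlight
{
    // Speed of light in a vacuum, in metres per second.
    public const double SpeedOfLight = 299792458.0;

    // The light travels out and back, so the one-way distance
    // is half of the round-trip distance.
    public static double DistanceMetres(double roundTripSeconds)
    {
        return SpeedOfLight * roundTripSeconds / 2.0;
    }

    // Inverse of DistanceMetres: how long the round trip takes
    // for a surface at the given distance.
    public static double RoundTripSeconds(double distanceMetres)
    {
        return 2.0 * distanceMetres / SpeedOfLight;
    }
}
```

Under this model, a surface 2 m away corresponds to a round trip of roughly 13 nanoseconds, and a timing error of a single nanosecond shifts the computed distance by about 15 cm. That gives a feel for why depth measured this way carries a margin of error.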

To retrieve and use depth frames from the Kinect 2 using the MultiSourceFrameReader, follow the steps below:

- Open the existing Kinect 2 Sample solution in Visual Studio, or the copy you have from the end of the previous lab.

- To begin, add a new value to the DisplayFrameType enum, then add the new depth frame data arrays that handle the data conversion. Open the MainPage.xaml.cs file in the Solution Explorer and add the code marked as new below:

      namespace Kinect2Sample
      {
          public enum DisplayFrameType
          {
              Infrared,
              Color,
              Depth   // New
          }

          public sealed partial class MainPage : Page, INotifyPropertyChanged
          {
              //...

              //Infrared Frame
              private ushort[] infraredFrameData = null;
              private byte[] infraredPixels = null;

              //Depth Frame (new)
              private ushort[] depthFrameData = null;
              private byte[] depthPixels = null;

              public event PropertyChangedEventHandler PropertyChanged;

              //...
          }
      }

- To initialize the new variables, use the SetupCurrentDisplay method, which is called every time the current display changes. That method is where the initialization logic for the depth frame belongs. Its switch statement uses currentDisplayFrameType to determine what to initialize and the size of the bitmap. Add a new case for the depth frame to this switch statement, as marked below:

      private void SetupCurrentDisplay(DisplayFrameType newDisplayFrameType)
      {
          currentDisplayFrameType = newDisplayFrameType;
          switch (currentDisplayFrameType)
          {
              case DisplayFrameType.Infrared:
                  //...
                  break;
              case DisplayFrameType.Color:
                  //...
                  break;
              // New
              case DisplayFrameType.Depth:
                  FrameDescription depthFrameDescription =
                      this.kinectSensor.DepthFrameSource.FrameDescription;
                  this.CurrentFrameDescription = depthFrameDescription;
                  // allocate space to put the pixels being
                  // received and converted
                  this.depthFrameData =
                      new ushort[depthFrameDescription.Width *
                          depthFrameDescription.Height];
                  this.depthPixels =
                      new byte[depthFrameDescription.Width *
                          depthFrameDescription.Height * BytesPerPixel];
                  this.bitmap =
                      new WriteableBitmap(depthFrameDescription.Width,
                          depthFrameDescription.Height);
                  break;
              default:
                  break;
          }
      }

  This is similar to the setup of the infrared frame variables. You may wonder why two distinct sets of variables are needed when they have the same type and size and are not used together here. The reason is that the infrared and depth frame data could be used together in advanced cases, such as removing the background of the bitmap.
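To get a feel for the allocation sizes involved, the buffer arithmetic can be sketched on its own. This is a minimal sketch under two assumptions: the depth frame is 512 by 424 (the resolution quoted in this lab's summary), and BytesPerPixel is 4, which is consistent with the four bytes (blue, green, red, alpha) written per pixel in ConvertDepthDataToPixels but is defined in an earlier lab rather than here. The DepthBuffers class is a hypothetical helper.

```csharp
using System;

// Hypothetical helper that mirrors the array sizes allocated in SetupCurrentDisplay.
public static class DepthBuffers
{
    // Assumption: four bytes per output pixel (blue, green, red, alpha).
    public const int BytesPerPixel = 4;

    // One ushort depth value per point in the frame.
    public static int DepthValueCount(int width, int height)
    {
        return width * height;
    }

    // One BytesPerPixel-sized group of bytes per point in the frame.
    public static int PixelByteCount(int width, int height)
    {
        return width * height * BytesPerPixel;
    }
}
```

For a 512 by 424 frame this gives 217,088 depth values and 868,352 bytes of pixel data.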

  There is one more thing to do to properly initialize the depth frame: add the new FrameSourceTypes value when the MultiSourceFrameReader is opened. In the MainPage constructor, add FrameSourceTypes.Depth to the following line, like so:

      this.multiSourceFrameReader =
          this.kinectSensor.OpenMultiSourceFrameReader(
              FrameSourceTypes.Infrared |
              FrameSourceTypes.Color |
              FrameSourceTypes.Depth);
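The `|` operator in the line above combines several enum values into a single value. The sketch below shows the general C# pattern with a hypothetical stand-in enum; DemoSourceTypes, its numeric values, and the SourceSelection helper are assumptions made for illustration and are not the SDK's FrameSourceTypes definition.

```csharp
using System;

// Hypothetical stand-in enum for illustration only (not the SDK's FrameSourceTypes).
[Flags]
public enum DemoSourceTypes
{
    None = 0,
    Color = 1,
    Infrared = 2,
    Depth = 4
}

public static class SourceSelection
{
    // True when every bit of 'source' is set in 'selection'.
    public static bool Includes(DemoSourceTypes selection, DemoSourceTypes source)
    {
        return (selection & source) == source;
    }
}
```

Because each value occupies its own bit, a combined selection can be tested for any individual member. This matches the idea that a reader opened without the depth source has no depth frames to hand out.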

- Now that the frame variables are initialized, you can set up the logic that runs when a frame arrives in Reader_MultiSourceFrameArrived. Once again, there is a switch statement here to handle the other frame types, so it's a matter of adding a case for the new DisplayFrameType of Depth. To do this, add the code marked as new below:

      private void Reader_MultiSourceFrameArrived(
          MultiSourceFrameReader sender,
          MultiSourceFrameArrivedEventArgs e)
      {
          //...
          switch (currentDisplayFrameType)
          {
              case DisplayFrameType.Infrared:
                  //...
                  break;
              case DisplayFrameType.Color:
                  //...
                  break;
              // New
              case DisplayFrameType.Depth:
                  using (DepthFrame depthFrame =
                      multiSourceFrame.DepthFrameReference.AcquireFrame())
                  {
                      ShowDepthFrame(depthFrame);
                  }
                  break;
              default:
                  break;
          }
      }

- ShowDepthFrame is a new method that needs to be created. While the ShowColorFrame method simply gets the color frame and converts it to the bitmap, the DepthFrame does not contain color information; it is raw depth data when it arrives. Some of the depth data may be outside reliable bounds, so it must be shaped to fit within a range that can be calculated with confidence. ShowDepthFrame first makes sure the frame data is what is expected, then retrieves the reliable range used to shape it. It then passes the data on for conversion to pixels before rendering to a bitmap. To make the ShowDepthFrame method, copy the code below:

      private void ShowDepthFrame(DepthFrame depthFrame)
      {
          bool depthFrameProcessed = false;
          ushort minDepth = 0;
          ushort maxDepth = 0;

          if (depthFrame != null)
          {
              FrameDescription depthFrameDescription =
                  depthFrame.FrameDescription;

              // verify data and write the new depth frame data
              // to the display bitmap
              if (((depthFrameDescription.Width * depthFrameDescription.Height)
                  == this.depthFrameData.Length) &&
                  (depthFrameDescription.Width == this.bitmap.PixelWidth) &&
                  (depthFrameDescription.Height == this.bitmap.PixelHeight))
              {
                  // Copy the pixel data from the image to a temporary array
                  depthFrame.CopyFrameDataToArray(this.depthFrameData);

                  minDepth = depthFrame.DepthMinReliableDistance;
                  maxDepth = depthFrame.DepthMaxReliableDistance;
                  depthFrameProcessed = true;
              }
          }

          // we got a frame, convert and render
          if (depthFrameProcessed)
          {
              ConvertDepthDataToPixels(minDepth, maxDepth);
              RenderPixelArray(this.depthPixels);
          }
      }

  The depth frame comes with data describing the range of distances over which the depth can be known accurately. There is a minimum reliable distance, which is about 50 cm from the device, and a maximum reliable distance, about 4.5 m from the device. The depth frame will contain points beyond these limits, but they are not used by the Kinect because they are not reliable. Displaying these far or close points on a bitmap would make those areas error prone, as the Kinect struggles to determine a realistic depth for a point it cannot see clearly. So the data points are shaped in the ConvertDepthDataToPixels method, which you will write next.

- The ConvertDepthDataToPixels method iterates through each point in the depth frame and finds a matching color for it based on the intensity. The intensity is a conversion of the actual depth to a scale of 0 to 255, so it fits in a byte and can therefore be used as a color. The method also checks whether the depth is within the reliable range, and makes the resulting intensity 0 (black) if it is not. Copy the new ConvertDepthDataToPixels method below:

      private void ConvertDepthDataToPixels(ushort minDepth, ushort maxDepth)
      {
          int colorPixelIndex = 0;
          // Shape the depth to the range of a byte
          int mapDepthToByte = maxDepth / 256;

          for (int i = 0; i < this.depthFrameData.Length; ++i)
          {
              // Get the depth for this pixel
              ushort depth = this.depthFrameData[i];

              // To convert to a byte, we're mapping the depth value
              // to the byte range, clamped to 255 so the cast cannot wrap.
              // Values outside the reliable depth range are
              // mapped to 0 (black).
              byte intensity = (byte)(depth >= minDepth && depth <= maxDepth
                  ? Math.Min(depth / mapDepthToByte, byte.MaxValue)
                  : 0);

              this.depthPixels[colorPixelIndex++] = intensity; //Blue
              this.depthPixels[colorPixelIndex++] = intensity; //Green
              this.depthPixels[colorPixelIndex++] = intensity; //Red
              this.depthPixels[colorPixelIndex++] = 255; //Alpha
          }
      }

  There is a new variable in this method called mapDepthToByte. This int is the scale factor that maps the maximum depth value onto the byte range (256 possible values, 0 to 255). Because the integer division rounds the scale factor down, depths close to maxDepth can scale to slightly more than 255, so the result is clamped to 255 before the cast to a byte.
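The per-point mapping can be tried out in isolation with a few worked values. The sketch below is a standalone restatement of the idea, not the lab's method: DepthShading.ToIntensity is a hypothetical helper, it assumes maxDepth is at least 256 (so the scale factor is not zero), and it clamps the scaled value to 255 so that the byte cast cannot wrap around.

```csharp
using System;

// Hypothetical standalone version of the depth-to-intensity mapping.
public static class DepthShading
{
    public static byte ToIntensity(ushort depth, ushort minDepth, ushort maxDepth)
    {
        // Integer scale factor from depth units to the byte range.
        // Assumes maxDepth >= 256, otherwise this would be zero.
        int mapDepthToByte = maxDepth / 256;

        // Points outside the reliable range are drawn black.
        if (depth < minDepth || depth > maxDepth)
        {
            return 0;
        }

        // Clamp so that values scaling above 255 do not wrap when cast.
        return (byte)Math.Min(depth / mapDepthToByte, 255);
    }
}
```

Assuming a reliable range of 500 to 4500 for illustration, the scale factor is 4500 / 256 = 17 in integer arithmetic. A depth of 2000 maps to 2000 / 17 = 117, a depth of 400 falls below the range and maps to 0, and a depth of 4500 scales to 264, which the clamp reduces to 255.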

- The final step (converting to a bitmap) is the same as the infrared frame bitmap conversion, so the same method is used, but it is passed a different array of pixels. RenderPixelArray was already written in step 5, so all that remains is to make a button to switch to this new frame type.

-

Open the MainPage.xaml and add a new button called Depth, with a click event, like so:

<ScrollViewer Grid.Row="2" ScrollViewer.HorizontalScrollBarVisibility="Auto" ScrollViewer.VerticalScrollBarVisibility="Auto"> <StackPanel Orientation="Horizontal"> <Button Content="Infrared" Style="{StaticResource FrameSelectorButtonStyle}" Click="InfraredButton_Click"/> <Button Content="Color" Style="{StaticResource FrameSelectorButtonStyle}" Click="ColorButton_Click"/><Button Content="Depth"Style="{StaticResource FrameSelectorButtonStyle}"Click="DepthButton_Click"/></StackPanel> </ScrollViewer>Then open the code behind file: MainPage.xaml.cs and add the DepthButton_Click method:

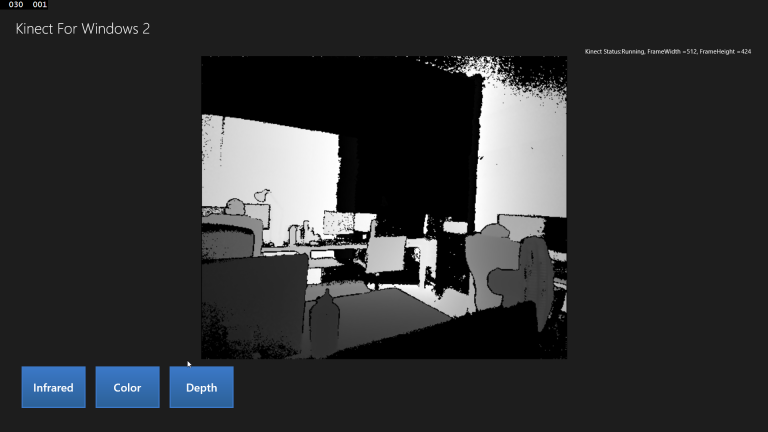

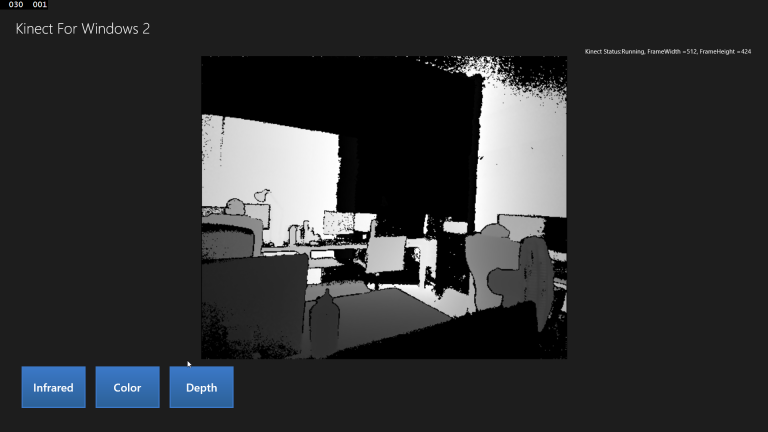

private void DepthButton_Click(object sender, RoutedEventArgs e){SetupCurrentDisplay(DisplayFrameType.Depth);} - Build and Run the application. Click the Depth button and the Depth Frame will show!.

Although it looks similar to the infrared frame, the data is different. Here, the whiteness of a pixel represents that point's distance from the camera (whiter is farther away). This is a visualization of distance data, which is not usually expressed through a single 2D image. Notice how the object in the bottom right of the screen is pitch black, and the farthest corner is also pitch black. These areas are closer than DepthMinReliableDistance or farther than DepthMaxReliableDistance, and so have been set to black in the code. Also notice how the entire image appears jittery and fuzzy around edges. This is because the image does not represent a visual feed; it represents calculations of time, which carry a margin of error when computed at such high speeds.

- As an extra activity, look back at where maxDepth is retrieved from DepthMaxReliableDistance in the ShowDepthFrame method and change it to a number larger than the reliable maximum, say 8000. The results are quite interesting but, as discussed, large distances are unreliable for the Kinect to calculate as real points, so the fuzziness of the image increases at large distances.

Summary

This lab taught you how to retrieve a depth frame, along with the other frame types, from the MultiSourceFrameReader, and how to use that frame data to make a visible bitmap rendered in XAML. The user can switch between the three feeds with buttons.

You may have noticed that the resolution of the depth frame matches the resolution of the infrared frame, at 512 by 424. That's because the IR camera and the IR emitter work together to get body data, while the color feed is for getting a real color picture. Many more dots are emitted than there are points in the depth frame (more than 512 x 424 = 217,088), but the dots are used together and converted into 217,088 points of depth so that they can be reliably calculated later into a 3D model or the prediction of a body. If users had a night vision camera, they could perhaps see the dots being spread across the room from the IR emitter in the Kinect 2.

You may have seen 3D modelling applications which use the Kinect 2 to scan an object into a virtual world model. The depth frame is used to achieve this. The depth frame can also be thought of as a point cloud: a collection of 3D points in world space, which can then be used to create a mesh by connecting the points. In this lab, each depth point's position in the frame serves as a 2D x, y coordinate within the frame's resolution, and its z depth value sets the color of that point, reflecting its depth. In a 3D application, you could instead use x, y and the z depth together as a 3D point, placing the depth frame's points in a 3D world with a camera to visualize the resulting point cloud.
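As a rough illustration of turning one depth sample into a 3D point, the sketch below uses a simple pinhole camera model. Everything in it is an assumption made for illustration: the Point3 and PointCloudSketch types are hypothetical, the focal lengths and principal point are caller-supplied parameters rather than real calibration values, depth is taken to be in millimetres, and Y is left pointing down to match image rows. A real application would more likely rely on the SDK's own coordinate mapping support than on hand-written math like this.

```csharp
using System;

// Hypothetical 3D point type for illustration.
public struct Point3
{
    public double X;
    public double Y;
    public double Z;
}

public static class PointCloudSketch
{
    // Converts the sample at 'index' in a row-major depth array into a 3D point.
    // fx, fy (focal lengths in pixels) and cx, cy (principal point) are
    // assumed inputs, not values taken from the sensor.
    public static Point3 ToPoint(int index, ushort depthMillimetres, int frameWidth,
                                 double fx, double fy, double cx, double cy)
    {
        int x = index % frameWidth;   // column in the frame
        int y = index / frameWidth;   // row in the frame

        double z = depthMillimetres / 1000.0;  // metres

        Point3 point;
        point.X = (x - cx) * z / fx;
        point.Y = (y - cy) * z / fy;
        point.Z = z;
        return point;
    }
}
```

With this model, a sample at the principal point lies straight ahead of the camera at its measured depth, and samples farther from the center of the frame fan outwards in proportion to their depth.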

In the next lab, you will implement a new visual into the application, the Body Data.

There is code available which is the completed solution from the work in this lab. The next lab will begin from this code.